Ladies, your mission is simple yet dangerous. The enemy is upon us … maybe. We have been handed a linked list. It is claimed that it does not loop. But if we are to send in our troops, we had better darn well make sure. Assumption is the mother of all, remember!?! It is your job to find a simple algorithm to check this linked list for loops. Ladies, O(n²) will not suffice. (Seriously, try it before reading on. Hint: the photo on the left shows Bob Floyd rendered using Floyd-Steinberg dithering.)


“This solution is Floyd’s Cycle-Finding Algorithm as published in Non-deterministic Algorithms by Robert W. Floyd in 1967. It is also called The Tortoise and the Hare Algorithm.” (Stephen Ostermiller) [Finding a Loop in a Singly Linked List]

Trivia: Actually, this solution is not published in the above-mentioned article [pdf]. Apparently Donald Knuth credits Floyd for the algorithm, but without citation. Since nobody influenced Knuth more than Bob Floyd [pdf], they might simply have discussed it, after which Knuth put it in The Art of Computer Programming II.
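For those who did try it first: here is a minimal sketch of the tortoise-and-hare idea in Python. The `Node` class and the function name `has_loop` are my own choices for illustration, not taken from any of the sources quoted above. The hare moves two steps for every step of the tortoise; if the list loops, the hare eventually laps the tortoise, and if it does not, the hare falls off the end.

```python
class Node:
    """A singly linked list node (hypothetical helper for this sketch)."""

    def __init__(self, value):
        self.value = value
        self.next = None


def has_loop(head):
    """Return True if the list starting at head contains a loop.

    Runs in O(n) time and O(1) extra space.
    """
    tortoise = hare = head
    while hare is not None and hare.next is not None:
        tortoise = tortoise.next      # one step
        hare = hare.next.next         # two steps
        if tortoise is hare:          # the hare caught up: there is a loop
            return True
    return False                      # the hare reached the end: no loop


def build_list(values):
    """Build a loop-free list and return all of its nodes in order."""
    nodes = [Node(v) for v in values]
    for current, following in zip(nodes, nodes[1:]):
        current.next = following
    return nodes
```

To try it, build a list with `build_list`, call `has_loop` on the first node, then point the last node's `next` back at an earlier node and call it again.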

Archive for the ‘CodeAlgorithms’ Category

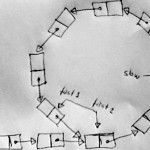

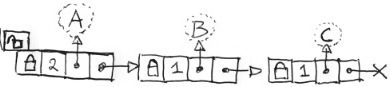

We have all been there. Multiple threads, many objects, and then you realize you need per-object locking. Maybe you should add a mutex to each and every object, but there are many objects and collisions are rare. So, you should use a linked list with a 4-tuple node: {mutex, count, &object, &next}. Before you use an object, lock the list and see if the object is in any node: if yes, increment the count; if no, create a node with a count of 1. In either case, get a pointer to the mutex and unlock the list. Now lock the node, using the pointer to the mutex, and do what you need to do with the object. Afterwards, unlock the node, lock the list again, and either decrement the count or delete the node. Implementing that won’t be hard, just follow the doodle you just made while inserting imaginary objects A, B, A, and C (on the right). But you feel you can do better: collisions of the same object are rare, but collisions of unrelated objects run rampant. You realize locking and searching the list might become a bottleneck. How about chopping the linked list into chunks with a hash array? You doodle some more (on the left). A little more complex, but doable. Given a large enough hash array, the list chunks will seldom be longer than one node.

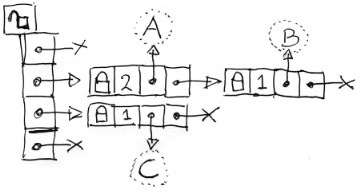


Then you realize that you can give each list chunk its own mutex. And then you get that special feeling. You know the one I mean. Utter happiness hits you: Why not just fill the hash array with mutexes only? You can’t help but draw it out (to the right), just to prolong that nice feeling. What a world, what a life, you are in love. Well, maybe not “in love,” but your ventral tegmental area floods your caudate with dopamine like there is no tomorrow. If you have read this far, you know I am not joking.
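A rough sketch of that last doodle, a hash array filled with nothing but mutexes (sometimes called lock striping), could look like this in Python. The class name `StripedLocks`, the method `lock_for`, and the default of 61 stripes are assumptions of mine for illustration. Hashing on `id(obj)` is also just one possible choice; a prime stripe count is used because object addresses tend to be aligned, which would otherwise leave most slots unused.

```python
import threading


class StripedLocks:
    """A fixed array of mutexes; each object hashes to one of them."""

    def __init__(self, stripes=61):
        # A prime count spreads aligned addresses over all slots.
        self._locks = [threading.Lock() for _ in range(stripes)]

    def lock_for(self, obj):
        """Return the mutex that guards obj.

        Unrelated objects may share a mutex (a collision), which costs
        some parallelism but never correctness.
        """
        return self._locks[id(obj) % len(self._locks)]


def add_many(locks, counter, times):
    """Increment counter['n'] under the mutex that guards counter."""
    for _ in range(times):
        with locks.lock_for(counter):
            counter["n"] += 1
```

No list, no counts, no node allocation: the price is that two unrelated objects occasionally wait on each other, and that a thread holding one of these mutexes must not try to take a second one from the same array, since both objects might hash to the same slot.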


Randomness is really not compatible with the human mind. We see order in everything. Only homogeneous distributions feel random to humans. If you roll a die three times and it comes up six every time, you (and I) either suspect the die is weighted or feel sure that the next throw is going to be not-six. Don’t deny it, random must be “fair.” Look at the dots in the scatter plots above: which is schemed and which is random? Sometimes a whole paper is needed to convince scientists that a distribution is just random and that there is no underlying scheme. Look, for example, at [Clustering of the Cosmic Ray Ages of Stone Meteorites] (Andrew S. Tanenbaum).

To accommodate human feelings some form of control over the randomness is needed. “The way to handle controlled randomness is actually pretty simple. It’s commonly called a shuffle bag.” (Sean McArthur) [A Less-Random Generator] Almost all successful games use random control like that.
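As I understand the idea, a shuffle bag is filled with a fixed set of outcomes, shuffled, and then emptied one draw at a time; only when it is empty does it get refilled. The sketch below is my own minimal Python version, with the class name `ShuffleBag` and the method `draw` chosen for illustration rather than taken from the quoted article.

```python
import random


class ShuffleBag:
    """Hands out every item exactly once before any item repeats."""

    def __init__(self, items, rng=None):
        self._items = list(items)
        if not self._items:
            raise ValueError("a shuffle bag needs at least one item")
        self._rng = rng or random.Random()
        self._bag = []

    def draw(self):
        """Return the next item, refilling and reshuffling when empty."""
        if not self._bag:
            self._bag = self._items[:]
            self._rng.shuffle(self._bag)
        return self._bag.pop()
```

With `ShuffleBag(range(1, 7))` as a die, any run of six draws that starts on a refill contains each face exactly once, so three sixes in a row cannot happen, although two in a row still can across a refill boundary.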

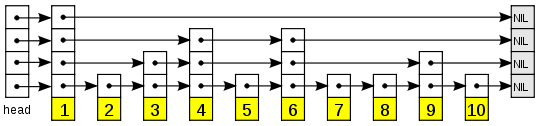


The skip list is a relatively unknown data structure. If you know it already, you can stop reading. If not, keep reading because “the algorithms for insertion and deletion in skip lists are much simpler and significantly faster than equivalent algorithms for balanced trees.” (William Pugh) [Skip lists: a probabilistic alternative to balanced trees]. Speaking from experience, I have to say this is true. Coding up skip lists is easy. I’ve written a reentrant version that uses read/write locking, a version for a hash table to replace chaining, a version that allows Θ(log(n)) indexing, and an unrolled version. It is not perfect for all problems, but it is adaptable and has some other nice properties. However, I like it best for its simplicity. To find the 7 in the above skip list, we start at the top left and go right until we hit a value >7 (or NIL). We go down one level and continue to the right, and so on. So we visit the nodes 1, 4, 6, and 7. Search is Θ(log(n)) on average. Since the reentrant version is somewhat less trivial to write, click this link for a GPLed implementation.
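To back up the claim that coding one is easy, here is a small, single-threaded sketch in Python. It is not the GPLed reentrant version mentioned above; the names `SkipList`, `insert`, `contains`, and `keys`, the maximum of 16 levels, and the coin-flip probability of 0.5 are all assumptions made for this example.

```python
import random


class _Node:
    def __init__(self, key, level):
        self.key = key
        self.forward = [None] * level   # forward[i] is the next node on level i


class SkipList:
    MAX_LEVEL = 16

    def __init__(self, rng=None):
        self._head = _Node(None, self.MAX_LEVEL)
        self._level = 1                 # number of levels currently in use
        self._rng = rng or random.Random()

    def _random_level(self):
        level = 1
        while level < self.MAX_LEVEL and self._rng.random() < 0.5:
            level += 1
        return level

    def _predecessors(self, key):
        """Start top left, go right while the next key is smaller, go down."""
        update = [self._head] * self.MAX_LEVEL
        node = self._head
        for i in reversed(range(self._level)):
            while node.forward[i] is not None and node.forward[i].key < key:
                node = node.forward[i]
            update[i] = node
        return update

    def insert(self, key):
        update = self._predecessors(key)
        level = self._random_level()
        self._level = max(self._level, level)
        new = _Node(key, level)
        for i in range(level):          # splice the node in on each of its levels
            new.forward[i] = update[i].forward[i]
            update[i].forward[i] = new

    def contains(self, key):
        node = self._predecessors(key)[0].forward[0]
        return node is not None and node.key == key

    def keys(self):
        """Walk the bottom level, which holds every key in sorted order."""
        out, node = [], self._head.forward[0]
        while node is not None:
            out.append(node.key)
            node = node.forward[0]
        return out
```

Note that this sketch happily stores duplicate keys; a real implementation would decide whether to reject or overwrite them.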


In 1992, I coded the first incarnation of yellow pages on-a-map, CityDisc, the brainchild of Ben Hoefnagels, a great guy and visionary. I really liked to work for him. He would tell me what he wanted, and let me code. In those days, software had to run on a 6 MHz Intel 80286 from a 720 kB floppy disc. I explained to Ben that in order to fit 3000 streets (and 30,000 addresses) on one floppy, we should use vector graphics. So Ben told me: “OK, go do it!” This first prototype was rather slow. So Ben told me: “Make it faster!” Profiling showed that 92% of the run time was consumed by one function: sqrt(). Small wonder, coding vector rendering is all about Pythagoras. For hours I tried, fruitlessly, to make a fast my_sqrt(). Then I moved the problem up one layer of abstraction. If I could not speed up sqrt(), I might find a way to speed up the function that used it: the length() function. On a hunch, I tried:

    #define length(x,y) ((x > y) ? (x + y/3) : (y + x/3))

I hit <Ctrl><F9> (Yes, Turbo C.) and BANG, there was the map, almost instantaneously. I never forgot that lesson: Problems can be hard on one level of abstraction but easy on another.
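How far off is that hunch? The sketch below redoes the macro in Python and compares it with the exact length. It assumes x and y are non-negative (absolute differences), and it uses true division where the C macro on integers would truncate, so the numbers differ slightly from the 1992 original. The function names are mine.

```python
import math


def fast_length(x, y):
    """Approximate sqrt(x*x + y*y) as the long side plus a third of the short side."""
    return x + y / 3 if x > y else y + x / 3


def worst_relative_error(limit=200):
    """Largest relative error over all integer points in [0, limit] x [0, limit]."""
    worst = 0.0
    for x in range(limit + 1):
        for y in range(limit + 1):
            if x == 0 and y == 0:
                continue                # length zero: nothing to compare
            exact = math.hypot(x, y)
            worst = max(worst, abs(fast_length(x, y) - exact) / exact)
    return worst
```

On this grid the approximation stays within about 6% of the true length, with the worst case on the diagonal where x equals y. That is apparently good enough for drawing streets on a low-resolution screen.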

“File-level deduplication has the lowest processing overhead but is the least efficient method. Block-level dedupe requires more processing power, and is said to be good for virtual machine images. Byte-range dedupe uses the most processing power and is ideal for small pieces of data that may be replicated and are not block-aligned, such as e-mail attachments. […] ZFS provides block-level deduplication, using SHA256 hashing, and it maps naturally to ZFS’s 256-bit block checksums. The deduplication is done inline […]” (Chris Mellor) [ZFS gets inline dedupe]


“Good for virtual machine images.” I’d like to nominate this remark for the understatement of the year contest. Note it would also be great for vectorized OLAP. Also note that compression engines like LZW use “byte-range dedupe” on a data stream. … Implementing byte-range dedupe on a random access system won’t be easy. … Besides, performance might suffer if the contents of every block are expressed in terms of other blocks. … Probably a dynamic dictionary is worth a try. … Somebody stop me!

    with x( s, ind ) as
    ( select sud, instr( sud, ' ' )
      from ( select
             '53  7    6  195    98    6 8   6   34  8 3  17   2   6 6    28    419  5    8  79'
             sud from dual )
      union all
      select substr( s, 1, ind - 1 ) || z || substr( s, ind + 1 )
           , instr( s, ' ', ind + 1 )
      from x
         , ( select to_char( rownum ) z
             from dual
             connect by rownum <= 9
           ) z
      where ind > 0
      and not exists ( select null
                       from ( select rownum lp
                              from dual
                              connect by rownum <= 9
                            )
                       where z = substr( s, trunc( ( ind - 1 ) / 9 ) * 9 + lp, 1 )
                       or    z = substr( s, mod( ind - 1, 9 ) - 8 + lp * 9, 1 )
                       or    z = substr( s, mod( trunc( ( ind - 1 ) / 3 ), 3 ) * 3
                                          + trunc( ( ind - 1 ) / 27 ) * 27 + lp
                                          + trunc( ( lp - 1 ) / 3 ) * 6
                                       , 1 )
                     )
    )
    select s
    from x
    where ind = 0
    /

(Anton Scheffer) [Solving a Sudoku using Recursive Subquery Factoring]

Impressive code from our amici over at Amis. But also an impressive example of how far one can go outside the original domain of a language. I mean, recursive queries? On the other hand, this algorithm does not look too good in Scala or in Perl either. The mathematics of Sudoku has been studied in depth. Generalized Sudoku has been shown to be NP-complete, and of the many ways of solving Sudoku, dancing links and constraint programming seem to be very popular. (Typically, it takes a computer milliseconds to solve a Sudoku.)
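For comparison, the same brute-force idea (take the first blank, try every digit that does not already occur in its row, column, or 3×3 box, and repeat) can be sketched in Python. This is my own depth-first reading of the query, not a translation endorsed by its author; the function names are hypothetical, and the grid below is my assumption of the puzzle that the quoted string encodes, written row by row with a blank for every empty cell.

```python
PUZZLE = "".join([
    "53  7    ",
    "6  195   ",
    " 98    6 ",
    "8   6   3",
    "4  8 3  1",
    "7   2   6",
    " 6    28 ",
    "   419  5",
    "    8  79",
])


def conflicts(s, ind, z):
    """True if digit z already occurs in the row, column, or box of cell ind."""
    row, col = divmod(ind, 9)
    box = (row // 3) * 27 + (col // 3) * 3      # top-left cell of the 3x3 box
    for lp in range(9):
        if s[row * 9 + lp] == z:                # same row
            return True
        if s[col + lp * 9] == z:                # same column
            return True
        if s[box + (lp // 3) * 9 + lp % 3] == z:  # same box
            return True
    return False


def solve(s):
    """Yield every completed grid reachable from the 81-character string s."""
    ind = s.find(" ")
    if ind < 0:
        yield s                                 # no blanks left: a solution
        return
    for z in "123456789":
        if not conflicts(s, ind, z):
            yield from solve(s[:ind] + z + s[ind + 1:])
```

Calling `next(solve(PUZZLE))` returns the first completed grid as one 81-character string, just like the `s` column of the query.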

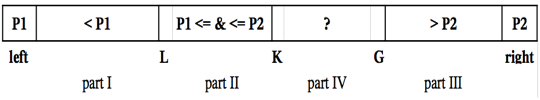

“The new Quicksort algorithm uses partitioning a source array T[ ] a, where T is primitive type […], to three parts defined by two pivot elements P1 and P2 (and therefore, there are three pointers L, K, G, and left and right […]) shown [below]:

It is proved that for the Dual-Pivot Quicksort the average number of comparisons is 2*n*ln(n), the average number of swaps is 0.8*n*ln(n), whereas classical Quicksort algorithm has 2*n*ln(n) and 1*n*ln(n) respectively.” (Vladimir Yaroslavskiy) [paper, link, Thanks John.]
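To make the three-part partitioning concrete, here is a bare-bones Python sketch in the spirit of the quoted description. It takes the first and last element as the pivots P1 and P2 and omits the refinements a production version would have (such as smarter pivot selection or switching to insertion sort for small ranges), so treat it as an illustration under those assumptions rather than as the published algorithm. The lowercase `l`, `k`, and `g` play the role of the pointers L, K, and G.

```python
def dual_pivot_quicksort(a, left=0, right=None):
    """Sort list a in place between indices left and right (inclusive)."""
    if right is None:
        right = len(a) - 1
    if left >= right:
        return
    if a[left] > a[right]:
        a[left], a[right] = a[right], a[left]
    p1, p2 = a[left], a[right]          # pivots, with p1 <= p2

    l = left + 1                        # a[left+1 .. l-1]  <  p1
    g = right - 1                       # a[g+1 .. right-1] >  p2
    k = l                               # a[l .. k-1] lies between p1 and p2
    while k <= g:
        if a[k] < p1:
            a[k], a[l] = a[l], a[k]
            l += 1
        elif a[k] > p2:
            while a[g] > p2 and k < g:
                g -= 1
            a[k], a[g] = a[g], a[k]
            g -= 1
            if a[k] < p1:               # the element swapped in may belong left
                a[k], a[l] = a[l], a[k]
                l += 1
        k += 1

    l -= 1
    g += 1
    a[left], a[l] = a[l], a[left]       # move p1 into its final position
    a[right], a[g] = a[g], a[right]     # move p2 into its final position

    dual_pivot_quicksort(a, left, l - 1)
    dual_pivot_quicksort(a, l + 1, g - 1)
    dual_pivot_quicksort(a, g + 1, right)
```

Because the sketch recurses on all three parts, already sorted or all-equal input can drive the recursion depth up to about n/2, which is fine for a demonstration but not for large arrays.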